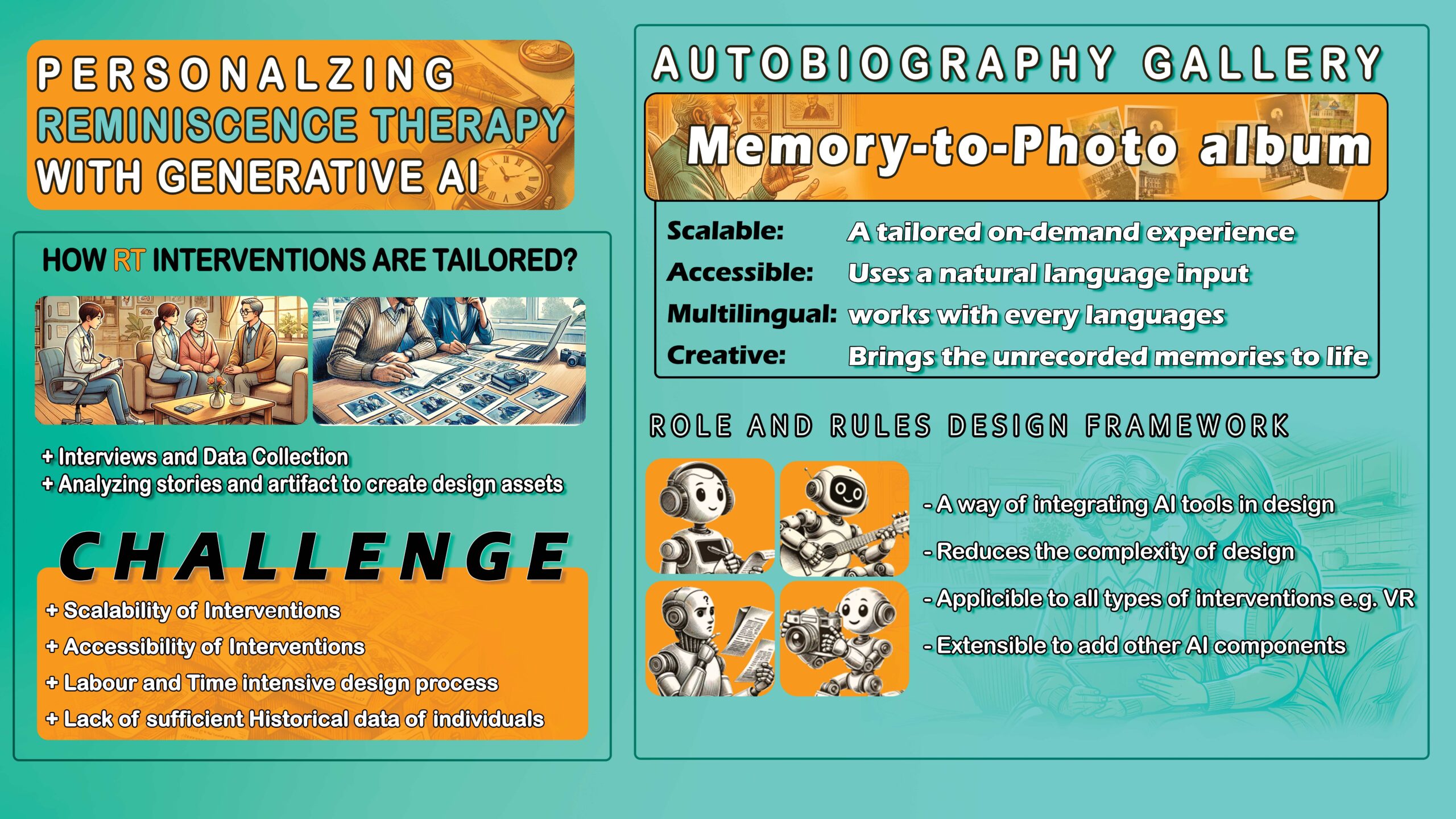

This article chronicles my journey as a master’s student at the University of Calgary, exploring the intersection of generative AI, virtual reality (VR), and human-computer interaction (HCI) to create innovative tools for dementia care. Over 2.5 years of study, starting from early VR prototypes, I refined the system into a scalable web application that transforms memory descriptions into personalized photo albums. Through iterations incorporating AI models for image generation, speech processing, and interactive engagement, the project evolved to address key challenges such as data collection, cultural representation, and accessibility. Join me as I share the technical breakthroughs, design decisions, and future possibilities of AI-driven dementia care interventions.

Note: This study has been submitted for peer review as a conference paper.

Setting the stage

When I started my master’s at the University of Calgary in Fall 2022 under the supervision of Dr. Mohammad Moshirpour, I joined the Memory Lane Project, an initiative spearheaded by my co-supervisor, Dr. Linda Duffett-Leger. The goal was ambitious: use virtual reality (VR) to recreate personalized environments that could help people living with dementia (PLWD) reconnect with their memories. The application would follow the Reminiscence Therapy (RT) framework to improve PLWD’s quality of life. Think digital replicas of childhood homes or cherished family gatherings: powerful in theory, but faced with real-world hurdles.

Reminiscence Therapy (RT): Sharing life experiences, memories and stories from the past. Because a person with dementia is often more able to recall things from many years ago than recent memories, reminiscence draws on this strength. Objects, video clips, photos as well as sounds and smells can be helpful in reminiscence, invoking memories and stories that give both comfort and stimulation. (cite: https://www.alzscot.org/)

However, turning this vision into reality proved more challenging than anticipated. The Memory Lane Project faced significant data collection challenges. First, family caregivers, already overwhelmed by daily responsibilities, struggled to find time for lengthy interviews or to search for old photos. Second, dementia’s progression meant some participants declined to stages where they could no longer engage with the intervention, making it difficult to collect or validate memories. Finally, some memories had no recorded data (photos, videos, or other artifacts), leaving us with incomplete or nonexistent records to work with.

These challenges forced us to rethink our approach. By Spring 2023, generative AI was rapidly advancing, with tools like GPT-3.5 and DALL-E 2 making waves in HCI research. While there wasn’t yet a tool for generating real-time VR environments, I began to wonder: What if we could create a 2D version of Memory Lane, a system that generates on-demand RT experiences without relying on pre-existing data? It would also be a more manageable scope for completing my master’s program.

This idea sparked the metaphor of a time-traveling photographer: an AI that could “travel back in time” to capture moments from memory descriptions, even if no physical photos existed. Instead of relying on old albums or fragmented records, users could simply describe a memory—like a childhood picnic or a family holiday—and the system would generate a photo album of that moment. This became the foundation of the Autobiography Gallery, a generative AI-powered tool designed to create personalized, on-demand RT experiences.

Starting Development of Autobiography Gallery

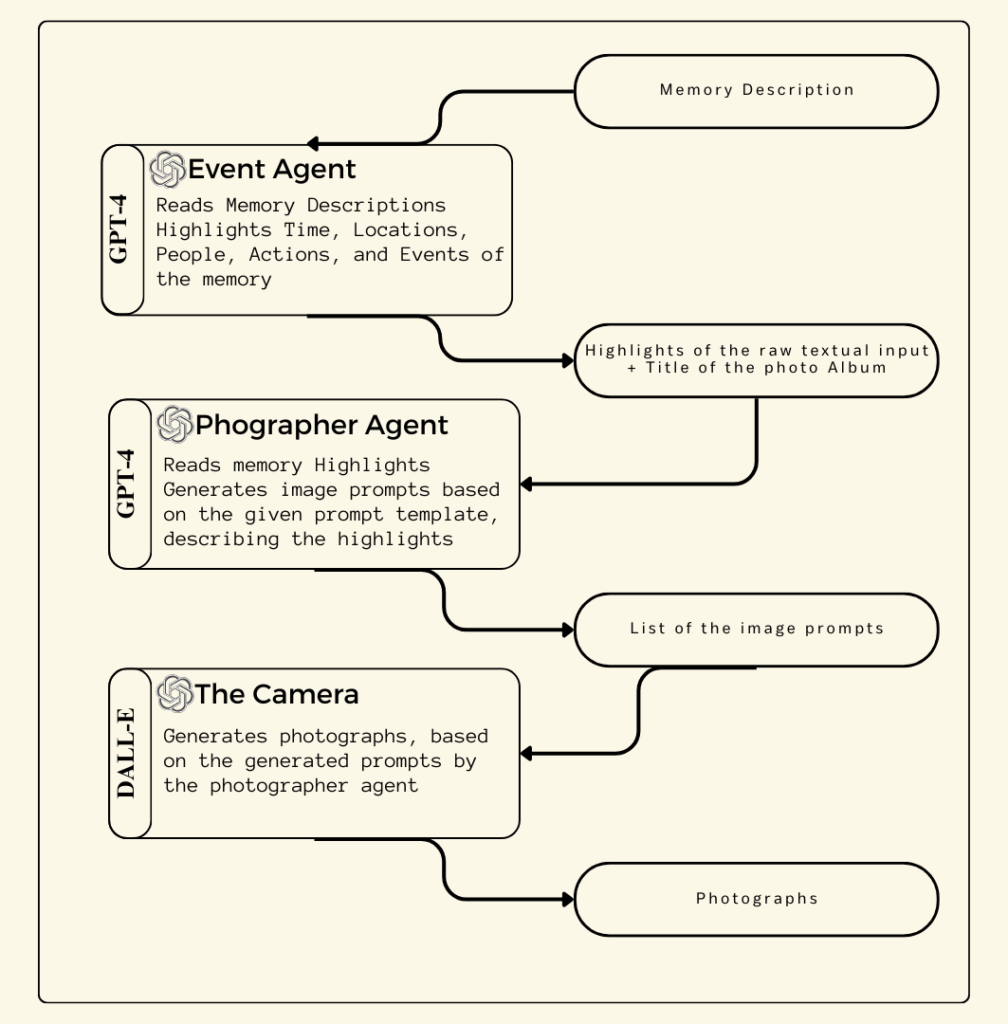

The development of the Autobiography Gallery began with a rough prototype built atop an existing VR project in Unity, leveraging the engine’s flexibility to test early concepts. During this phase, I designed a custom multi-agent system called the Role and Rules Framework, where each AI agent had distinct responsibilities (e.g., parsing memories, structuring prompts) and followed predefined collaboration rules. This framework ensured seamless interaction between components like GPT-3.5, which transformed user memories into structured descriptions (e.g., converting “my sister’s wedding in 1990” into “outdoor ceremony, floral arch, 1990s bridal gown, champagne toast”), and DALL-E 2, which generated initial visual representations.

By Summer 2023, iterative testing led to Version 1 of the system. However, DALL-E 2’s limitations became glaring: human faces often appeared blurry, distorted, or unnaturally smooth, weakening emotional resonance, and scenes included anachronisms like modern clothing in historical settings. Additionally, while the Role and Rules Framework demonstrated promise, the late-2023 emergence of advanced agent frameworks like Microsoft’s AutoGen rendered our custom system redundant. Rather than be distracted by a framework that was no longer novel, I scrapped it and focused on refining the core memory-to-image pipeline.

Early prototype of Autobiography Gallery. While the system successfully generated memory-based images, DALL-E 2’s limitations resulted in uncanny, distorted facial features and inconsistent realism. However, early user studies showed potential for stimulating memory recall, demonstrating the promise of AI-generated reminiscence therapy.

Version 1 system architecture of Autobiography Gallery, designed using the Role and Rules Framework. Each AI agent operates within a defined role—Event Agent extracts key memory elements, Photographer Agent structures image prompts, and The Camera (DALL-E) generates final visuals—while adhering to structured interaction rules.
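As a rough illustration of how the three roles hand work to one another, here is a minimal Python sketch. It is not the project's code: the `Agent` class, `run_pipeline`, the rule strings, and the stubbed handlers are all assumptions made for this example, and the stubs stand in for the calls that GPT-3.5 and DALL-E 2 handled in Version 1.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class Agent:
    """One role in the pipeline: a name, its rules, and a handler."""
    name: str
    rules: List[str]               # illustrative; a real agent might place these in its system prompt
    handle: Callable[[str], str]   # stands in for a model call


def event_agent(memory: str) -> str:
    # Hypothetical stub: a real Event Agent would ask an LLM to extract key memory elements.
    return f"key elements of: {memory}"


def photographer_agent(elements: str) -> str:
    # Hypothetical stub: structures the extracted elements into an image prompt.
    return f"photograph showing {elements}"


def camera(prompt: str) -> str:
    # Hypothetical stub: a real Camera would call an image model and return an image.
    return f"<image for '{prompt}'>"


PIPELINE = (
    Agent("Event Agent", ["extract only what the user described"], event_agent),
    Agent("Photographer Agent", ["write one prompt per scene"], photographer_agent),
    Agent("The Camera", ["render the prompt unchanged"], camera),
)


def run_pipeline(memory: str, agents: Sequence[Agent] = PIPELINE) -> List[str]:
    """Pass each agent's output to the next and keep a trace of every step."""
    trace = []
    message = memory
    for agent in agents:
        message = agent.handle(message)
        trace.append(f"{agent.name}: {message}")
    return trace


for step in run_pipeline("my sister's wedding in 1990"):
    print(step)
```

Keeping each role behind the same `handle` signature means a stub can later be swapped for a real model call without touching the loop.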

This phase validated the concept but underscored a critical gap: without photorealistic details and accurate human depictions, the therapeutic potential remained limited. It set the stage for integrating DALL-E 3, which would later resolve these issues, transforming vague approximations into emotionally resonant snapshots.

Transformational Leap with DALL-E 3

The introduction of DALL-E 3 in October 2023 marked a pivotal shift for the Autobiography Gallery. Where DALL-E 2 often produced flat or disjointed scenes—imagine architecture students in 1950s England rendered as vague silhouettes in a poorly lit studio—DALL-E 3 captured intricate details: the texture of drafting paper, the cut of mid-century suits, and the warm glow of afternoon light filtering through workshop windows.

Critically, DALL-E 3’s ability to process richer, more nuanced descriptions allowed us to refine our system’s focus on emotional authenticity. For instance, while DALL-E 2 struggled to depict human expressions beyond generic neutrality, DALL-E 3 could render subtle cues—like a student’s faint smile as they sketched a blueprint—transforming sterile visuals into emotionally resonant snapshots. This shift wasn’t just technical; it was deeply human. A simple smile in a generated image could evoke nostalgia, bridging the gap between AI output and lived experience.

By late 2023, these advancements enabled the Autobiography Gallery to move beyond experimental prototypes, offering families and caregivers a tool that felt less like a technical novelty and more like a window into the past.

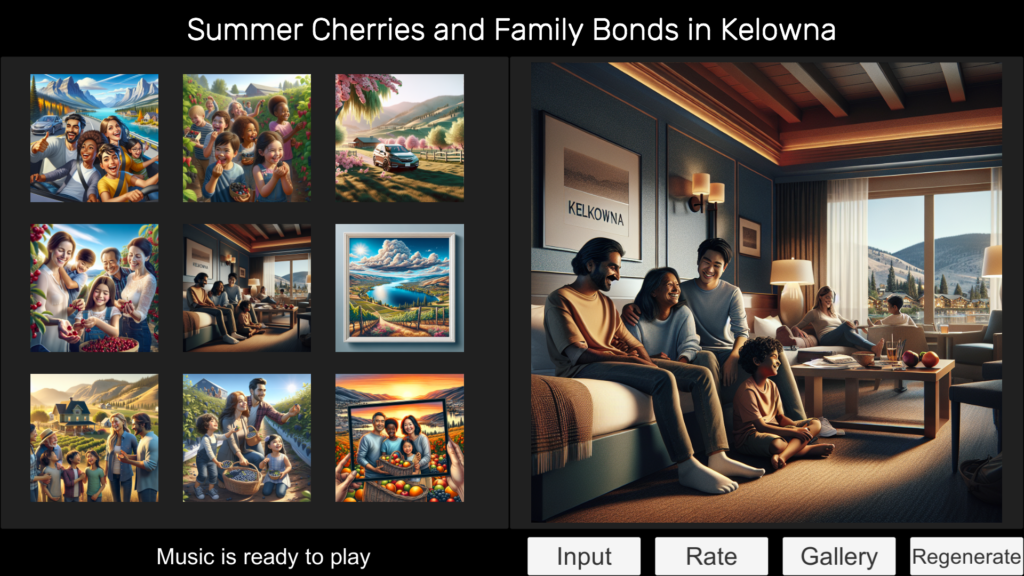

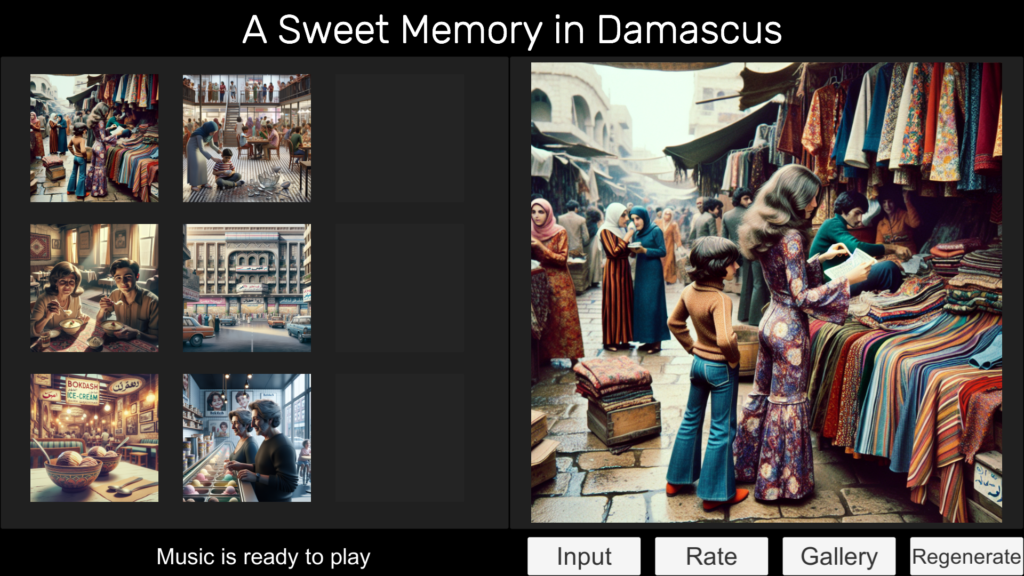

Version 2 of Autobiography Gallery, powered by DALL-E 3, showcasing a more realistic and emotionally engaging AI-generated memory album. This iteration significantly improves facial accuracy, scene coherence, and detail richness, enhancing the reminiscence therapy experience.

Expanding the AI System: Multisensory Enhancements and New Agents

Building on DALL-E 3’s success, we expanded the Autobiography Gallery’s capabilities to create richer, multisensory experiences. Central to this upgrade was GPT-4o, which replaced GPT-3.5 as the core conversational agent. Its advanced reasoning allowed the system to infer unspoken details—like the warmth of a 1950s English architecture studio from a simple phrase such as “my late-night drafting sessions”—transforming sparse descriptions into vivid, emotionally layered prompts. To further democratize access, we integrated OpenAI’s Whisper, enabling users to narrate memories in their native languages. A grandmother recounting her childhood in Mandarin or a caregiver describing a Syrian wedding in Arabic could now have their stories transcribed and processed seamlessly, breaking down language barriers that once limited participation.
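A speech-to-image chain of this kind might look roughly like the sketch below. It assumes the models are reached through OpenAI's Python SDK (v1-style client); the model names `whisper-1`, `gpt-4o`, and `dall-e-3` are the API identifiers, while the system prompt wording, the function names, and the returned dictionary are assumptions for illustration and not the project's actual implementation.

```python
SYSTEM_PROMPT = (  # assumed wording, not the project's actual prompt
    "Turn the user's memory into one vivid, era-accurate photo description."
)


def transcribe(client, audio_file) -> str:
    """Speech to text with Whisper; the spoken language is detected by the model."""
    result = client.audio.transcriptions.create(model="whisper-1", file=audio_file)
    return result.text


def enrich(client, memory_text: str) -> str:
    """Ask the chat model to expand a sparse memory into an image prompt."""
    response = client.chat.completions.create(
        model="gpt-4o",
        messages=[
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": memory_text},
        ],
    )
    return response.choices[0].message.content


def generate_image_url(client, prompt: str) -> str:
    """Render one image for the prompt and return its URL."""
    response = client.images.generate(
        model="dall-e-3", prompt=prompt, n=1, size="1024x1024"
    )
    return response.data[0].url


def memory_to_image(client, audio_file) -> dict:
    """Chain the three steps: narration -> transcript -> prompt -> image."""
    transcript = transcribe(client, audio_file)
    prompt = enrich(client, transcript)
    return {
        "transcript": transcript,
        "prompt": prompt,
        "image_url": generate_image_url(client, prompt),
    }


# Usage with the real SDK (needs `pip install openai` and an API key):
#   from openai import OpenAI
#   with open("memory.m4a", "rb") as audio:   # hypothetical file name
#       item = memory_to_image(OpenAI(), audio)
```

Passing the client in as an argument keeps the functions easy to exercise with a stand-in object, which helps when testing without network access.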

We also explored Suno AI v3 to generate era-specific music themes, envisioning a soundtrack that could amplify emotional resonance. For example, a memory of a 1960s dance hall might pair generated images with period-appropriate jazz. However, practical challenges arose:

- Technical Hurdles: Suno lacked API access, forcing us to manually scrape its web interface using personal accounts—a brittle, unsustainable approach.

- Research Integrity: Introducing music risked biasing user evaluations of the core image-generation feature, muddying study results.

- Scalability: Without robust API integration, scaling music generation across users was impossible.

The background music—a soothing instrumental with Arabic-themed instruments, including the oud and qanun—enhances the emotional depth of the reminiscence process. This multi-sensory approach aims to evoke nostalgic emotions, further immersing users in their cherished memories.

Though promising, the music component was ultimately scrapped to maintain focus on refining the photo album’s visual and emotional fidelity. This streamlined approach ensured the Autobiography Gallery remained a reliable, scalable tool—one that transformed fleeting memories into enduring visual narratives, no matter how fragmented the past might seem.

Exploring Immersive Experiences

Building on this success, I explored how the Autobiography Gallery’s framework could extend into 3D and VR environments. The goal was to create an immersive space where users could interact with their AI-generated memories in new ways. A VR prototype was developed, enabling users to:

- Decorate virtual rooms with AI-generated photographs, such as placing framed images on digital tables or hanging them on virtual walls.

- Navigate a personalized gallery where memories were displayed as a chronological journey.

A demo showcased this vision: AI-generated photo frames populated a serene virtual gallery, with users able to “walk through” their memories. For example, a memory described as “family gatherings at home” might fill the space with images of holiday dinners, birthdays, or quiet moments in a living room.

Autobiography Gallery VR – Memory Intake Demo

Autobiography Gallery VR – Gallery Experience

Immersive View – Gallery Experience

While the VR prototype demonstrated feasibility, feedback from stakeholders emphasized prioritizing the 2D system for its accessibility and ease of use. The immersive VR experience remains a promising future direction, contingent on broader access to VR hardware and advancements in real-time AI rendering. For now, the Autobiography Gallery’s core strength lies in its ability to transform fleeting memories into tangible, emotionally resonant photographs—bridging the gap between past and present with simplicity and care.

Refinements and Transition to AWS

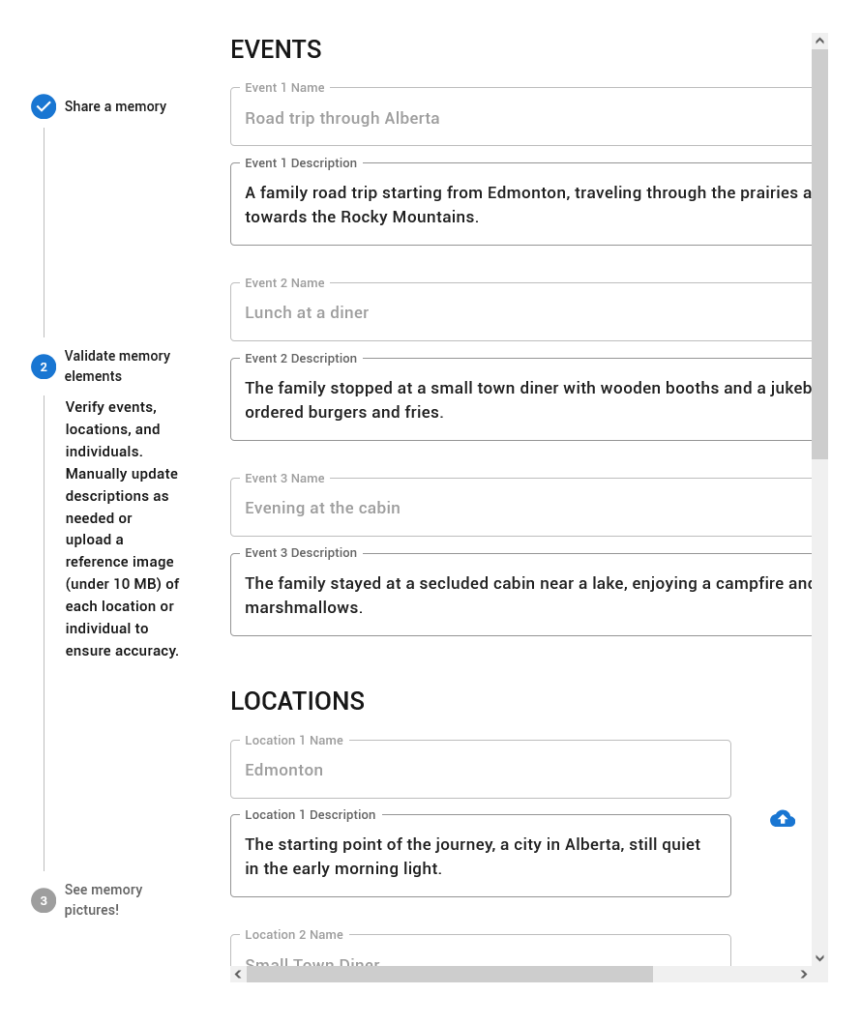

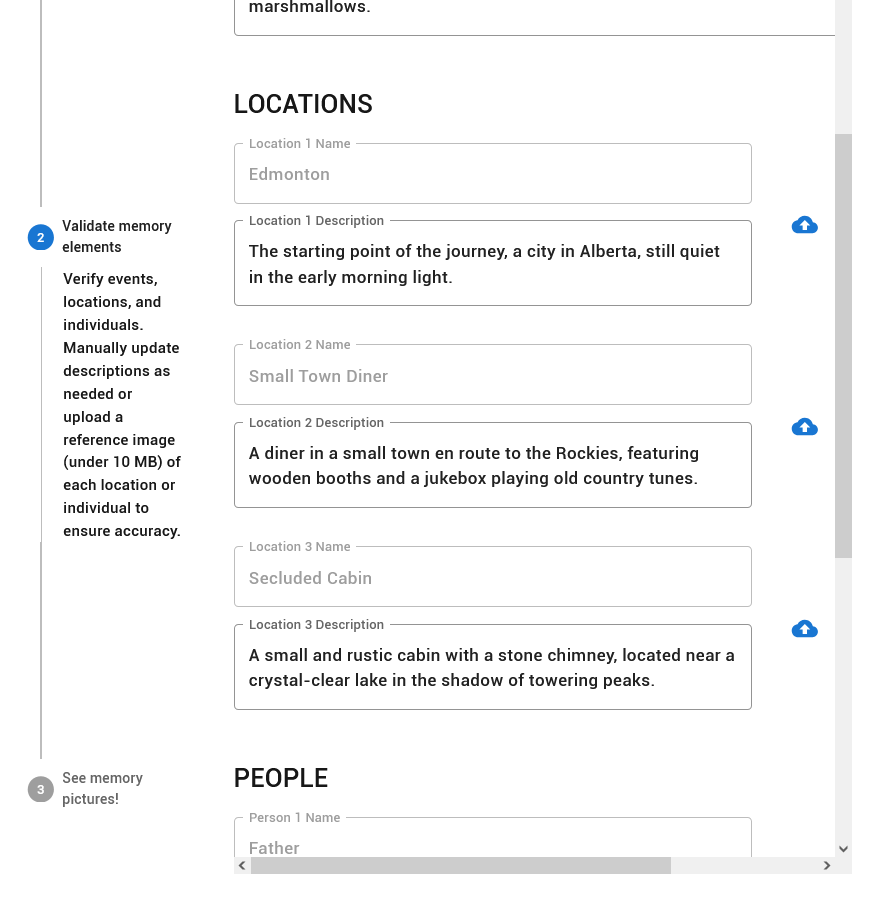

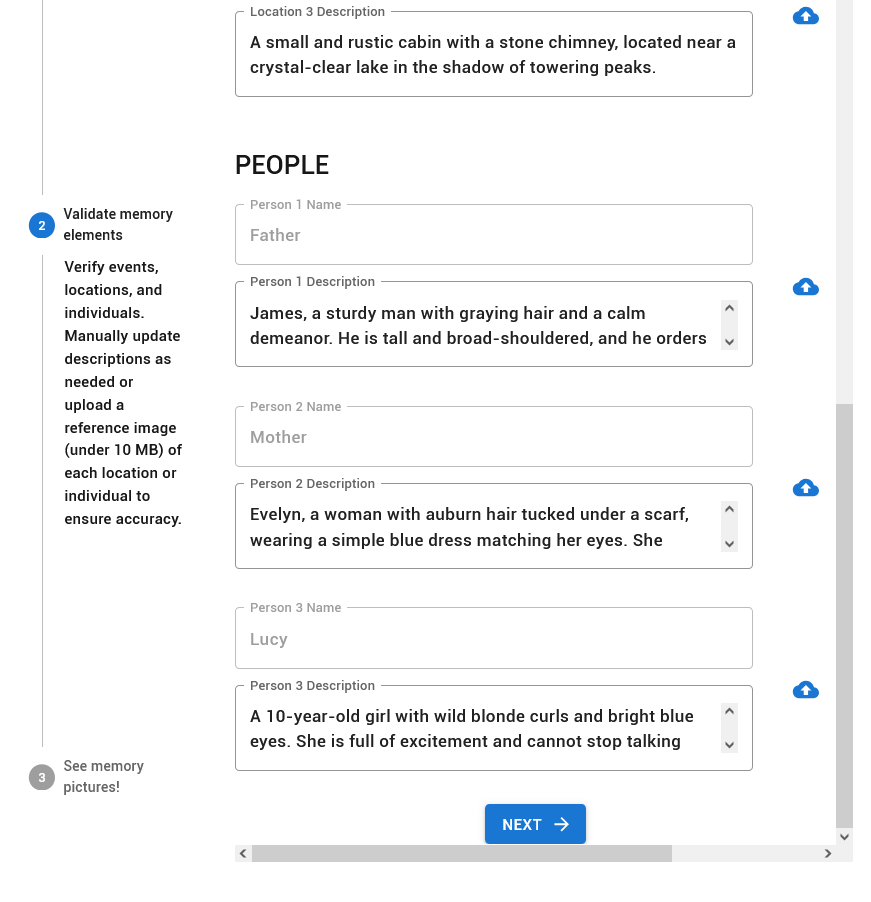

Following insights from focus groups and dyadic user studies, we identified three key refinement points to enhance the Autobiography Gallery’s effectiveness. These refinements were later integrated into a scalable web-based application, finalized in Fall 2024, to address accessibility and usability challenges.

Refinement Points and Solutions

- Increasing Accuracy:

- Challenge: Participants emphasized the need for images to align with cultural and personal details (e.g., traditional clothing, historical settings).

- Solution: We introduced the Elements Flow feature, allowing caregivers to refine AI-generated prompts by adjusting details like locations, time periods, or clothing styles. For example, a caregiver could edit a prompt from “a family gathering” to “a 1970s Syrian family gathering with embroidered tablecloths and brass coffee pots.”

- Enhancing Engagement:

- Challenge: Sustaining user interest required structured interaction.

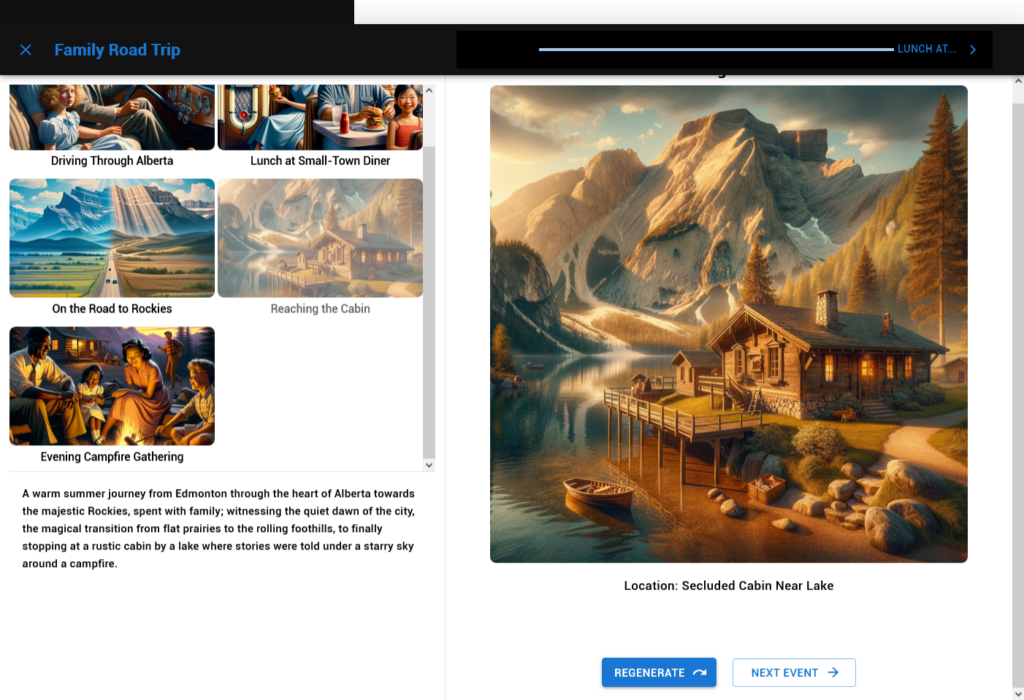

- Solution: The Event Timeline organized memories chronologically, transforming photo albums into guided journeys. Users could navigate memories like chapters in a book, fostering a sense of progression and discovery.

- Prompting Communication:

- Challenge: Caregivers needed tools to spark meaningful conversations.

- Solution: The Image Story feature embedded metadata (e.g., “This was your sister’s wedding in Damascus, 1982”) and auto-generated captions, serving as conversation starters.
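To make the three features concrete, the sketch below models a memory as a small record whose editable details feed the prompt (Elements Flow), whose year drives ordering (Event Timeline), and whose metadata yields a caption (Image Story). The `MemoryEvent` class, its field names, and the exact year given to the 1970s gathering are assumptions chosen for illustration, not the application's real data model.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class MemoryEvent:
    title: str
    year: int  # used only for ordering in this sketch
    elements: Dict[str, str] = field(default_factory=dict)  # caregiver-editable details

    def prompt(self) -> str:
        """Elements Flow: compose the image prompt from the current elements."""
        details = ", ".join(f"{key}: {value}" for key, value in self.elements.items())
        base = f"{self.title} ({self.year})"
        return f"{base}, {details}" if details else base

    def story(self) -> str:
        """Image Story: a short caption meant to start a conversation."""
        place = self.elements.get("location")
        where = f" in {place}" if place else ""
        return f"This was {self.title}{where}, {self.year}."


def timeline(events: List[MemoryEvent]) -> List[MemoryEvent]:
    """Event Timeline: order memories chronologically."""
    return sorted(events, key=lambda event: event.year)


wedding = MemoryEvent("your sister's wedding", 1982, {"location": "Damascus"})
gathering = MemoryEvent("a family gathering", 1975)  # 1975 is a placeholder for "the 1970s"

# A caregiver refines the generated elements before the image is produced.
gathering.elements.update({
    "location": "Syria",
    "table": "embroidered tablecloths and brass coffee pots",
})

for event in timeline([wedding, gathering]):
    print(event.year, "|", event.prompt(), "|", event.story())
```

Storing the details as separate elements, instead of one free-text prompt, is what lets a caregiver change a single field without rewriting the whole description.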

Transition to a Web-Based Platform

To scale the intervention, we migrated from a Unity prototype to a web application using:

- Backend: A Python Flask server handling AI integrations (Whisper, GPT-4, DALL-E 3), with its APIs deployed on a virtual server (AWS EC2).

- Frontend: A Next.js interface designed collaboratively with a research associate, prioritizing accessibility for older adults and caregivers.

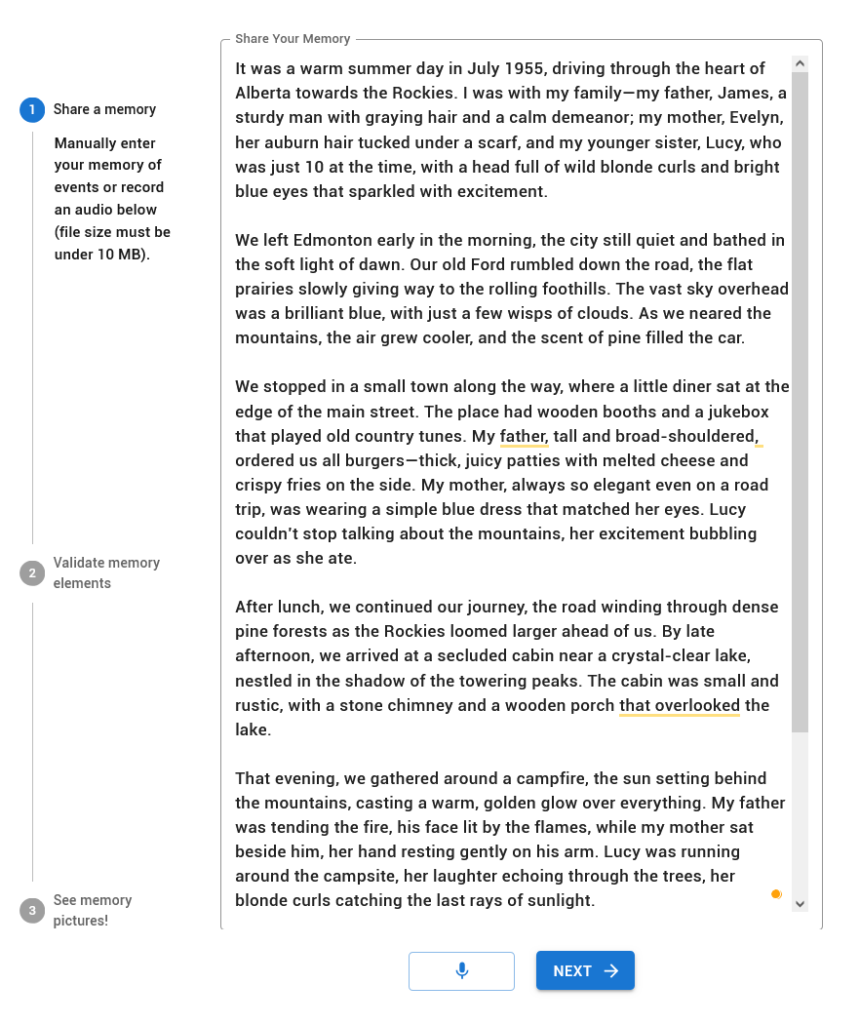

The web app streamlined the user experience:

- Voice Input: Whisper processed multilingual memory descriptions directly in-browser.

- Real-Time Editing: Caregivers could tweak prompts via the Elements Flow interface.

- Multi-Device Access: Families could view albums on tablets, laptops, or shared screens in care facilities.

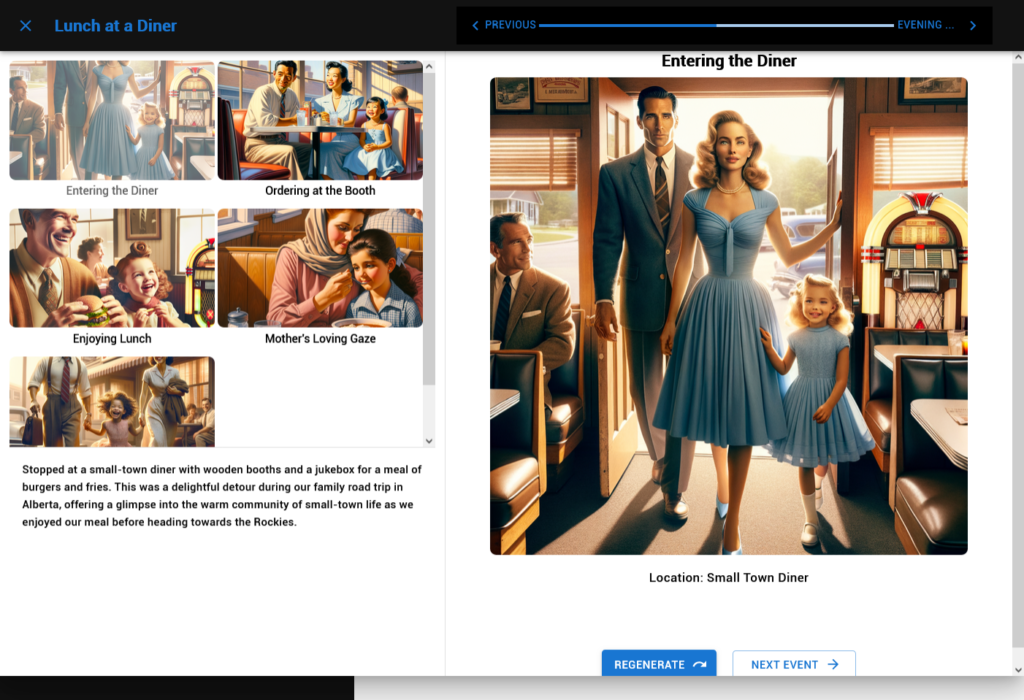

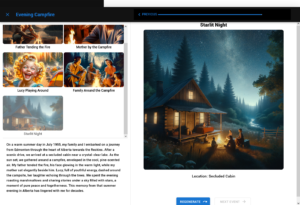

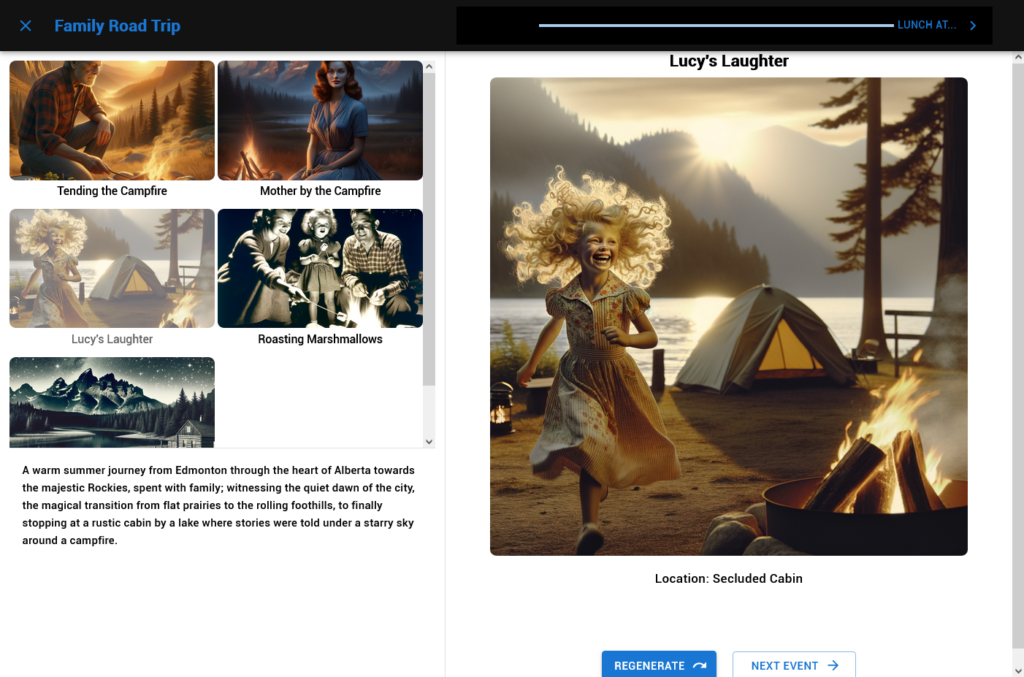

Refined Autobiography Gallery Web app

The gallery below shows screenshots of the memory-to-photo-album process in the web app. This refined version of the Autobiography Gallery improves image accuracy, engagement, and communication. With the enhanced Elements Flow and support for reference images, users can generate more precise visual memories. The new Event Timeline feature fosters deeper engagement, allowing users to relive their past in structured sequences. Additionally, embedded image metadata and story descriptions provide rich context, transforming each AI-generated photo into a meaningful narrative.
